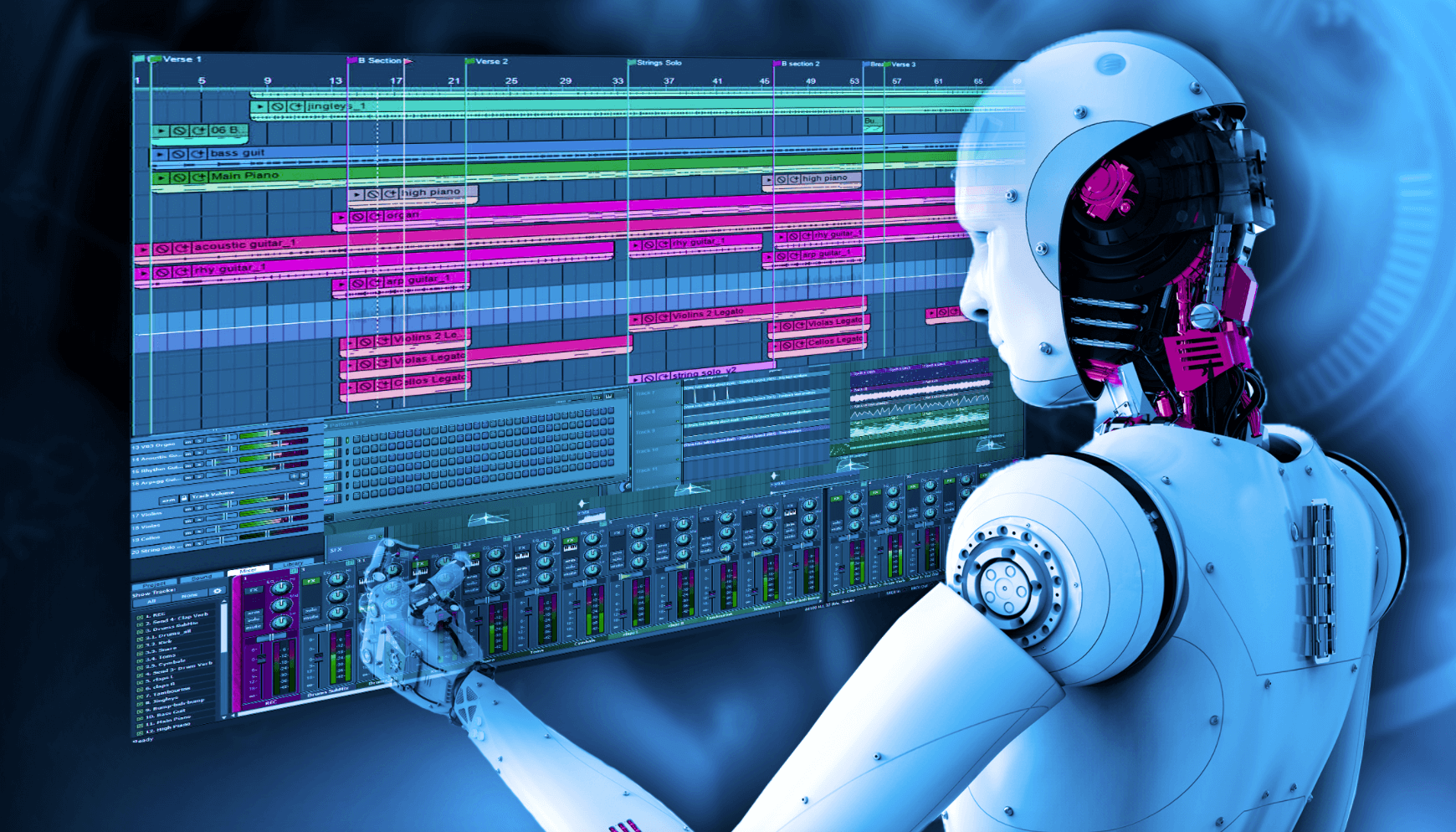

Artificial intelligence is being applied successfully to every phase of music production and consumption. Algorithms and machine learning systems are used to detect trends; compose songs and even complete albums; select and play instruments or imitate their sound and voice; mix and remix pieces; and design distribution and exploitation strategies in advertising, video games, cinema, television, radio, and more. They can also identify genres and styles as a human being would.

The capabilities of computer programs are constantly compared with the skills of people. At first, the opinions of professionals such as Pascal Pilon, head of the Canadian startup LANDR, predominated. He tried to settle the debate emphatically: “I don’t think anyone wants to hear songs made by robots because music tells stories.” However, more and more experiences seem to prove him wrong: in songwriting, sound interpretation and performance; in the analysis and programming of large-scale content; in the detailed recommendation and personalization of audio; and so on.

This sector has always been receptive to technological innovation, and its actors have managed to integrate new tools to reach higher artistic levels. The commercial side of the activity has been no exception to this dynamism: numerous agents have presented blockchain and big data as two key factors for keeping the business viable in the future. The example has spread, and lately the main electronic music festivals and competitions have opened up to discuss these issues in depth.

Within the framework of We are Europe, an association of eight leading cultural events on the continent, one of them, C/O Pop in Cologne (Germany), hosts in its 2018 edition, held from August 29 to September 2, a session entitled “Is artificial intelligence remodeling or destroying the music industry?” The panel features Antònia Folguera of Sónar+D; the researcher Òscar Celma; AI Music’s chief strategy officer, Inderjit Birdee; and Rachel Falconer of Goldsmiths Digital Studios.

Events of this kind are proving to be ideal settings for companies to showcase their new products, for professionals to share good practices, and for the general public to become familiar with the fundamentals of tools they probably use often without really knowing what they are based on. This broad category includes social networks, virtual assistants, streaming services, media outlets, bands and DJs, the festivals themselves, and even vehicles and other connected objects that make up the internet of things.

Aware of the relevance and scope of this unstoppable movement, universities around the world are developing projects and experiments in this field, on their own or in collaboration with other institutions and companies, to take the transformation even further. Scientists at the Massachusetts Institute of Technology (United States) recently created Pixel Player, a powerful program that, without manual supervision, locates the image regions in unlabeled videos that are associated with a specific sound and identifies them with pixel-level accuracy. Hence its name.

Developments in artificial intelligence are undeniably growing at an impressive pace. We have already seen AI win at Go, hold conversations, fly a fighter plane, imitate our handwriting and even work as a news editor during the last Olympic Games; now it has a new profession, having just debuted as a composer.

FlowMachines is a remarkable project born in the Sony CSL research laboratories, where a group of scientists have, for the first time, enabled an artificial intelligence system to compose an entire song; in fact, two of them.

The musical hit

FlowMachines is a system that has been fed more than 13,000 melodies covering a wide variety of musical styles, composers and songs, mainly jazz, pop, Brazilian music and Broadway works.

This means that when simply asked to “compose” a melody, it can search its database and assemble one, complete with lyrics drawn from fragments of phrases in those same songs. However, the system cannot yet work on its own: it relies on a human composer who produces the song, selects the style and genre, and also writes the lyrics. With all this information, FlowMachines searches its database and presents results within a few minutes.
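The article does not describe FlowMachines’ internals. As a rough illustration of how a system can “assemble” a new melody from fragments of a corpus, the following is a minimal sketch of a first-order Markov chain over note names, assuming each melody is a list of note-name strings. The toy corpus and the functions `build_transitions` and `generate_melody` are hypothetical and are not Sony CSL’s implementation.

```python
import random
from collections import defaultdict


def build_transitions(melodies):
    """Record, for each note, every note that followed it anywhere in the corpus."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current].append(nxt)
    return dict(transitions)


def generate_melody(transitions, start, length, seed=None):
    """Walk the chain from `start`, sampling each next note from observed successors.

    Successors are stored with repetition, so more frequent transitions are
    proportionally more likely to be chosen.
    """
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        successors = transitions.get(melody[-1])
        if not successors:  # dead end: nothing ever followed this note in the corpus
            break
        melody.append(rng.choice(successors))
    return melody


# Hypothetical toy corpus standing in for a real database of melodies.
corpus = [
    ["C", "D", "E", "C", "G"],
    ["E", "F", "G", "E", "C"],
    ["G", "A", "G", "F", "E", "D", "C"],
]

transitions = build_transitions(corpus)
print(generate_melody(transitions, start="C", length=8, seed=42))
```

Every note-to-note step in the output already occurs somewhere in the corpus, which is the sense in which the result is “assembled” from existing fragments. A first-order chain only looks at the previous note, so richer systems typically layer constraints such as style, meter or a human-chosen base melody on top; in the workflow described above, the human composer’s choices play that role.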

One song, ‘Daddy’s Car’, is the result of asking it to compose something pop in the style of The Beatles. For the second, ‘Mr. Shadow’, it was asked to assemble something in the style of “the American composers”, drawing on fragments of songs and melodies by composers such as Cole Porter, Gershwin and Duke Ellington, among others.

Creating the songs still involves much of what happens in a recording studio today. In this case, the human composer Benoît Carré selects the style and a base melody with a tool called FlowComposer. A tool called Rechord is then used to match pieces of audio generated by the system with other melodies, in order to finish the production and carry out the final mix.

These two songs are part of an album that went on sale in 2017, the first album composed by an artificial intelligence system for commercial release.

Music is an intangible product whose value is almost impossible to quantify. Unlike other products, whose price is based on the raw materials used and the man-hours invested, music offers no such yardstick. A song recorded in a few hours with just a guitar and a microphone cannot be compared in effort, instruments and time with a full orchestral piece. The latter may have taken more time and money to create and record, yet it may have had less effect or impact on the audience.

Given how the music industry is positioned to adapt to technology and to its commercial business models, we know that if we want to change and improve the industry, we have to bring real value to the source: musicians. They are constantly looking for ways to advance their careers sustainably and competitively. Sustainability in music can be viewed from many perspectives, but we believe it begins with empowerment through education and technology.

Serve the right set of customers

Pivots within startups can happen as often as they are unpredictable. In our case, after a few weeks of conversations with hundreds of music fans, musicians and industry professionals, we finally made the decision: we focused our energy on serving a particular group of customers, music students and emerging musicians. Musicians as a whole are highly knowledgeable, creative people who spend most of their lives studying to stay ahead of their competition. They value the stories behind music scenes and the content we can provide thanks to technology. But one of their main pain points, besides feeling underserved by music streaming services and platforms, is the lack of options for connecting and finding relevant music information.

We uncovered several pain points. For example, musicians are by default avid listeners: they depend on discovering and listening to new music, sometimes for more than 12 hours a day. However, they run into what we call music information overload: a multitude of discovery options designed for the passive listener, the average user who just wants to press play and follow their usual routine.

Part of the challenge these musicians face is balancing the time they spend practicing and learning with marketing their music and preparing for professional life. Music education and immediate access to reliable content serve them best. They need to advance in their careers as quickly as possible with the least possible effort.

Artificial Intelligence in Music: A Data-Based Approach

We believe that to help musicians and music students advance their careers, we need to democratize this knowledge. In doing so, technology can help scale the process and tailor the knowledge to each individual musician. Existing theories of artificial intelligence are far from complete, and, as noted above, music education often emphasizes factors other than communicating knowledge to students.

Going back to the first applications of artificial intelligence to music education in 1970, there were three basic components: domain expertise (imagine a teacher who knows absolutely everything about one aspect of music); the student model (knowing everything about a student’s skills and weaknesses); and teaching (imagine a person who knows several teaching methods and uses the best one for each situation).

Given the data now available, we can think of context as a fourth, more robust component of this equation. Traditionally, AI has been applied to musical composition, and although musicians are reluctant to use this technology, we believe AI should provide better tools to help musicians advance in their creative process. At Penn State University, a virtual reality classroom populated by artificially intelligent students was created so that trainee teachers could practice teaching. This is another example of how AI has evolved and been used in music education.
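To make the three classic components plus context more concrete, here is a minimal sketch of a hypothetical tutor that picks a student’s next exercise. The class names, the skills, the 0.0 to 1.0 mastery scale and the selection rule are all illustrative assumptions, not a description of any existing system.

```python
from dataclasses import dataclass, field


@dataclass
class Exercise:
    # Domain model entry: one exercise and the skill it trains.
    name: str
    skill: str
    difficulty: float  # assumed scale: 0.0 (easy) to 1.0 (hard)


@dataclass
class StudentModel:
    # Estimated mastery per skill, assumed to be on a 0.0-1.0 scale.
    mastery: dict = field(default_factory=dict)

    def weakest_skill(self, skills):
        # Unknown skills count as 0.0 mastery, so they are targeted first.
        return min(skills, key=lambda s: self.mastery.get(s, 0.0))


@dataclass
class Context:
    # Hypothetical fourth component: the student's current situation.
    minutes_available: int


def choose_exercise(domain, student, context):
    """Teaching strategy: target the weakest skill, slightly above current mastery."""
    skills = sorted({ex.skill for ex in domain})  # sorted for deterministic ties
    target = student.weakest_skill(skills)
    level = student.mastery.get(target, 0.0)
    candidates = [ex for ex in domain if ex.skill == target]
    # Illustrative rule: with little time available, stretch the student less.
    stretch = 0.1 if context.minutes_available < 15 else 0.2
    return min(candidates, key=lambda ex: abs(ex.difficulty - (level + stretch)))


domain = [
    Exercise("Major scales", "scales", 0.2),
    Exercise("Modal scales", "scales", 0.6),
    Exercise("Interval dictation", "ear_training", 0.3),
    Exercise("Chord dictation", "ear_training", 0.7),
]
student = StudentModel(mastery={"scales": 0.8, "ear_training": 0.4})
print(choose_exercise(domain, student, Context(minutes_available=30)).name)
# prints "Chord dictation"
```

Keeping the four components as separate objects means each one can be replaced independently, for example by swapping in a student model learned from practice data or a richer context that also records the instrument or the student’s goals.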

At the moment, part of our effort goes into providing value and knowledge about music in general from artists’ points of view. We have launched our Content Hub precisely for that purpose. As we continue to gather customer feedback and learn what value customers get from our product, we will implement new advanced technology features.